Genetic algorithms

What is the problem?

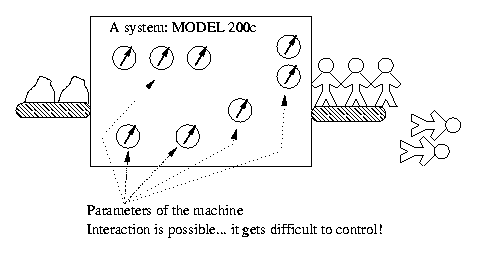

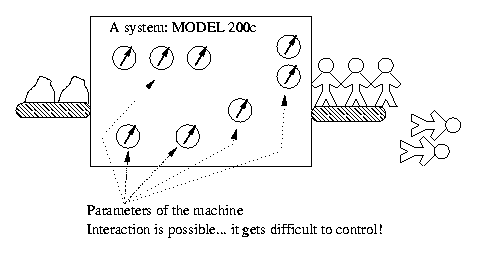

Suppose you have a system which you want to control. The reason for this

could be to achieve better performance for instance. In the situation depicted

here one may want to adjust the parameters of the system in order to produces

more little man . Of course for real life applications a lot of

factors have to be taken into account. If you increase the speed of the

conveyer belt for instance you will be required to make some change to

other parameters as well. And maybe this will influence some part of the

production process in ways that are difficult to predict. And so you may

end up having little men with different shapes because of the interactions

that specific to that very machine. This can be summarized by saying that

there are some non-linearities in the system which are very difficult to

model and even more to compute. So why try to model what the machine will

do? You can do it for small systems but what about real big ones? (Note

that the system can be a game, computer program or a machine.)

The idea: evolution

Instead of modelling the system why not try different solutions on it?

This of course implies that it is indeed save to submit the system to a

series of experiments. Just try different set of parameters and

see how well they perform. This allows you to determine the fitness

of a solution. Based on the fitness you can retain some of the best solutions

and throw away the bad ones. In this way you introduce some selection

which will help you improve you solutions.

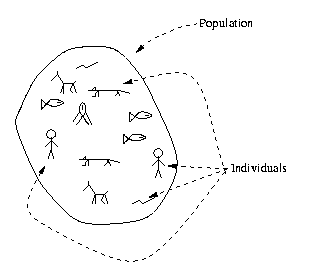

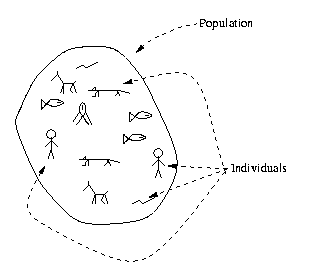

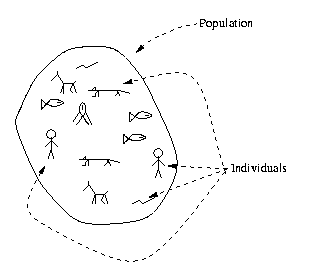

But before going any further it is important to introduce the term individual.

Until now this has been call a solution but individual is term used in

the literature. So, one such individual can be viewed as consisting of

2 elements: its GENE and its fitness. The gene being in the

example given above the different parameters of the system. A collection

of individuals is generally called a population. A population will

change over time (see later) and will consist of different generations

of individuals.

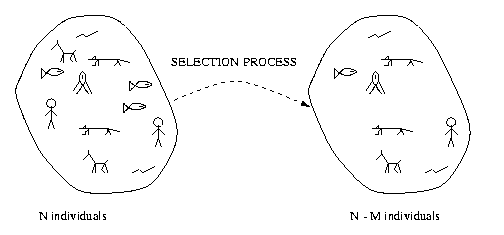

Let us resume at this point using the brief terminology introduced above.

You take a population (a collection of individuals) and determine the fitness

of each individual. In a next step you use a policy to retain some of the

solution (usually the best ones). Since some individuals where discarded

you need to introduce new ones in the population. This is done by using

genetic

operators. These are applied on the remaining individuals in the hope

the improve the population (that how we improve). We have a new population

(or partly at least) we can restart the cycle.

By using both a selection process and genetic operators bad individuals

are removed and new ones are generated with possibly greater potential.

Does it always work?

Hmm ... not with me :-{ Although genetic algorithms can indeed find good

solution and even surprising ones it is still no magic.

Important factors are the evaluation for example. If the function is

to strict for instance the likely hood of success it remote. If your evaluation

function (to look at it from the programming side) computes finesses in

a rather bimodal way (that is to say: or you are good or you are

real bad) then the fitness would give a bad indication about the quality

of an individual. This makes it very hard to compare good ones with each

other for instance.

Another factor is the size of the population. Of course the workload

is affected by this choice but the quality and the speed of the genetic

algorithm as well. To put it simple you can give more space for a good

solution to appear early if you use a big population. But then again there

is no guarantee about that either.

Yet other important parameters are related to the choice (probabilities)

of the genetic operators and the selection criterion used.

Phases

The number of step of genetic algorithm in its simplest form are fairly

small:

-

Generate a random population to start with (the first generation)

-

Evaluate the entire population

-

Apply the selection criterion

-

Use evolution to create new individuals (next generation)

-

Restart at step 2

Initial random population

This is quite obvious I guess fill your generation with randomly generated

individuals.

Note: the fact that this is random is important as the genetic

diversity will allow new solution to emerge.

Evaluation

This step is depends on the problem at hand. As the individual is of course

evaluate within the context of the problem we try to solve/optimize. The

fitness of each individual is determined in this step.

Selection

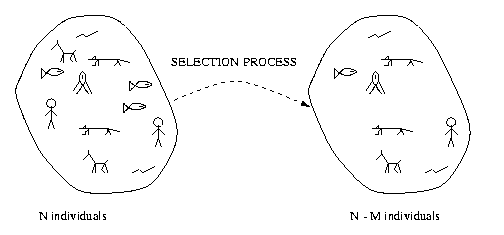

At this point it is possible to choose those individuals who are performing

well for what we want to achieve. All the other ones are not suitable and

will be discarded here. As a result we end up with a smaller population.

Different criterion can be used. It is of course a choice the as to be

made by the programmer.

-

By far the easiest approach is to used a TOP N policy. At each selection

step only the N best individuals will survive.

-

Another approach is to set a threshold for the fitness. Every individual

with a fitness higher than the threshold will remain.

-

Another approach is the used of tournaments. Two individual are chosen

at random and compete again each other. The one with the highest fitness

wins for example.

-

Yet another approach is roulette selection. Many variations can be proposed.

Genetic operators

In this step we use the better individuals to repopulate our genetic pool.

We can do this using genetic operators which work at the gene level. The

operation performed are combination, modification and duplication. The

selection of the different genetic operations usually happens at random.

But in order to steer the genetic algorithm probabilities are introduced

in the process. (Like 85% probability for one operator, 10% for another

etc..)

-

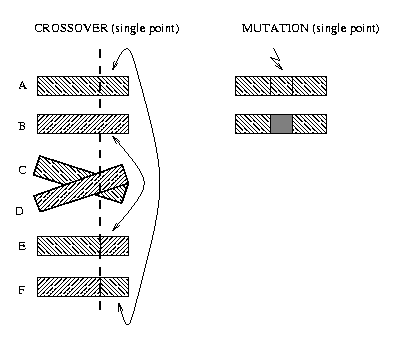

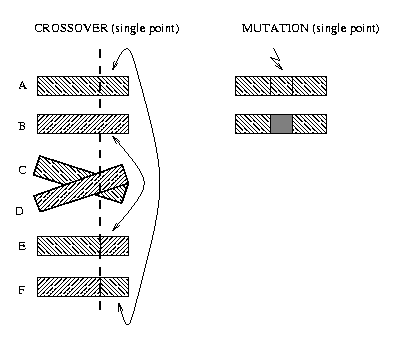

Crossover: combines the gene of two individuals by splitting them at a

randomly chosen point and interchanging the segments. Since this process

creates 2 new genes one usually chooses one of them at random.

-

Mutation: here a gene of individual is simply duplicated but an error is

introduced on purpose in the process. Mutation are the major source of

surprising

results. A mutant can indeed turn out to perform quite well compared

to others. And if not it will be illuminated quite naturally at the selection

step.

-

Copy: this pure duplication, hereby the influence of a solution in the

population increases. This fosters the chances of survival of the individual's

gene.

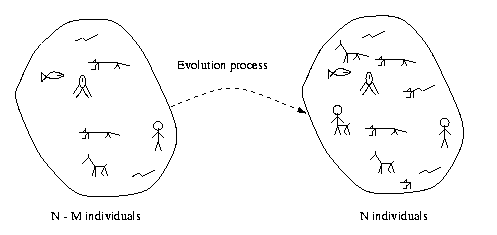

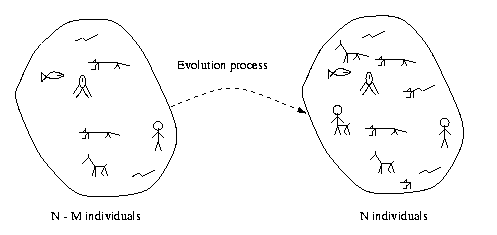

The new individuals are thus combinations or mutations of the individuals

that were retained by the selection process. An attempt has been done to

illustrate this. One can see that in the new population a centaur

appeared (combination of horse and man). Some of the new

individuals will indeed turn out to be better some not but this is of no

concern at this point.

When to stop?

How many times do we have to cycle trough the entire process? Well there

are 2 situations one can distinguish:

-

The problem we try to solve has an optimal solution and we found

it. In that case it is quite obvious that there is not reason to continue.

One can of course define optimal in different ways. (For example

when the error is small the epsilon)

-

In the other case we will have to settle for a good solution, that

is a solution that can be considered not satisfactory for what we want

to achieve. Usually this means we limit the number of generations. The

genetic algorithm will be stop after a certain number of cycles and the

best individual will be used.

Document was written by Parent Johan (January 2000)